TDD vs. No Test: A Tale of Two Development Journeys

Hossein Bakhtiari

—Apr 07, 2025

Have you ever reached a point in a project where making a small change feels like defusing a bomb? That’s what happens when tests are missing — and TDD aims to fix that.

In this post, we’ll follow two parallel development paths:

- One using Test-Driven Development (TDD).

- One following a "no tests" approach with manual verification.

This is a story many of us have lived through, and it highlights how both approaches scale over time. The goal isn’t to convince you with theory, but to show you the long-term consequences of each approach through real, relatable experiences.

We are all aware that Agile development is an incremental approach to software development. Consequently, instead of planning for an extended period, we only plan and develop for a short time. This process offers numerous benefits, including faster failure, which leads to faster learning, a shorter time to market, and a higher quality of software.

While we do not wish to delve into Agile in detail, incremental software development implies, from a technical perspective, that we either do not know or choose not to worry about the system’s exact future state. We have a goal or a broad vision, but we do not plan the details upfront. This poses a significant technical challenge: the design and code must be structured so that the system can evolve and adapt over time.

Some of you may be familiar with the situation where developers receive a challenging change request and push back: “This was not planned or discussed during our planning process and requires a significant amount of effort.” However, if we are honest with ourselves, accommodating such requests is precisely why software is called “soft”: it is supposed to be easy to change. That adaptability is the exact objective behind Agile.

Working in an Agile project entails building a feature on assumptions that may or may not still hold a few iterations from now. Therefore, you need a way to verify that your work still serves the final goal of the software and delights the customer. How do we verify this? By testing the application frequently.

I’m a fan of stories, so let’s explore the concept of developing software using two distinct approaches: Test-Driven Development (TDD) and the No Test approach. To facilitate a fair comparison, we’ll assume that each feature we intend to develop has an average of five business-related tests to conduct.

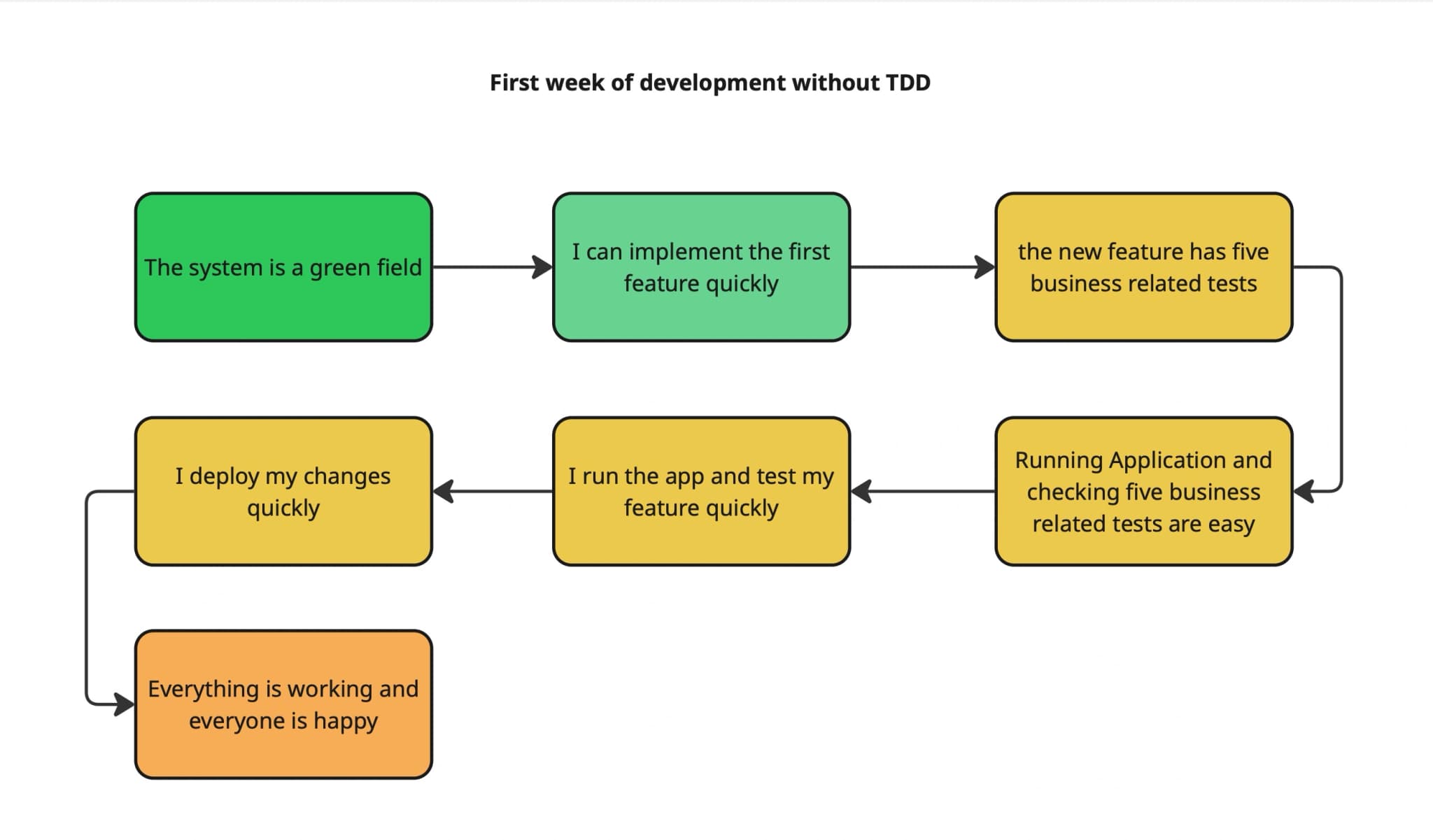

First Week of Development Without TDD

The project begins with a brand-new codebase. There are no constraints, no technical debt, and everything feels fresh. The team starts with excitement — it’s a greenfield system.

As a developer, I quickly implement the first feature. There are no automated tests to write, so I focus entirely on coding the functionality. Things move fast and feel productive.

Once the feature is complete, I mentally list out the five main business scenarios that need to be verified. These represent the core expectations for the feature to be considered complete.

I run the application locally and manually test each of these five scenarios. Since the code is fresh and simple, the checks are quick, and the logic is still top-of-mind.

Everything appears to work, so I proceed to deploy the changes. There’s little ceremony involved — just push and release.

The deployment is successful. The application works, and stakeholders are happy. From the outside, everything seems smooth and efficient.

The following diagram illustrates this development process from start to finish.

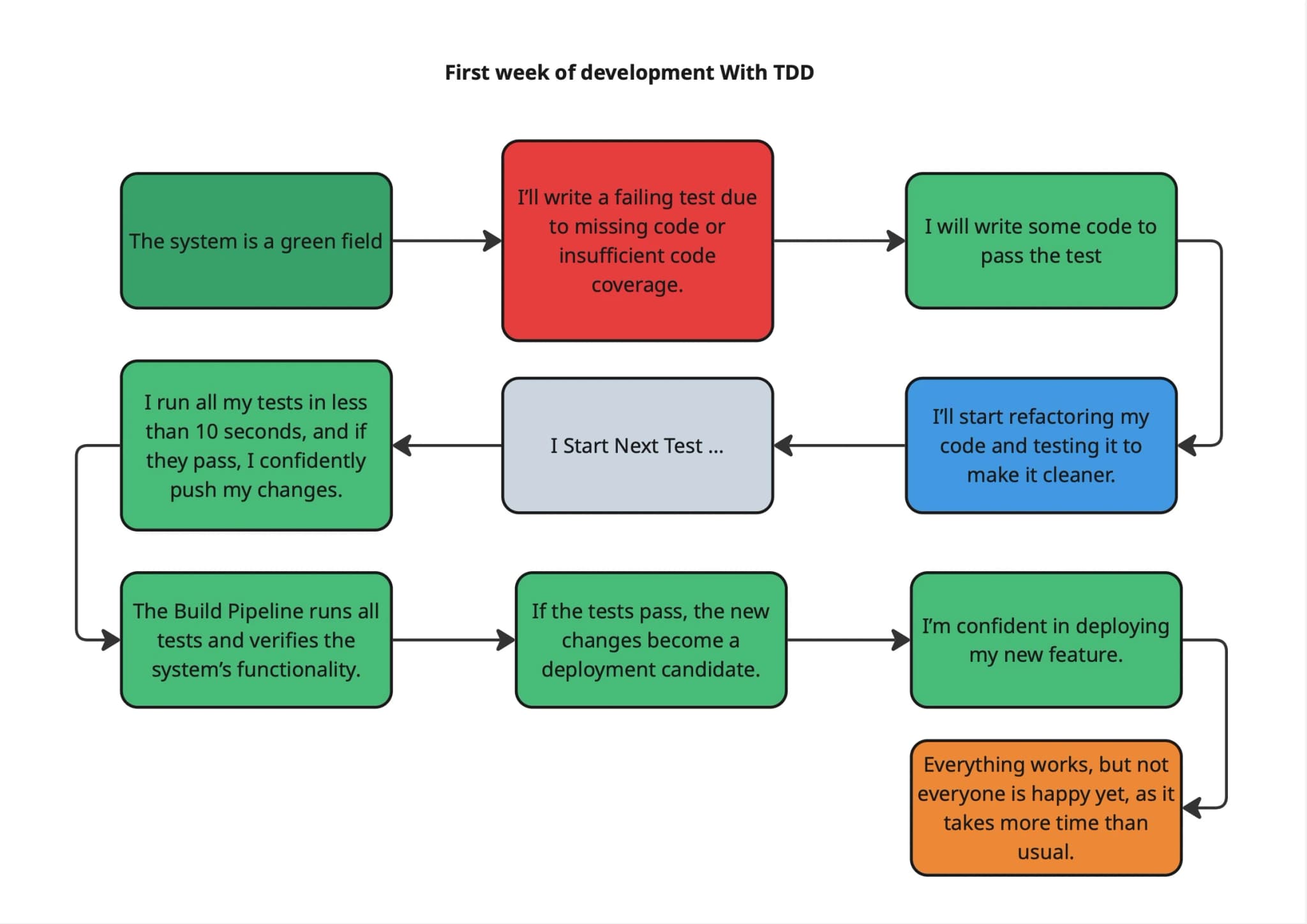

First Week of Development With TDD

The project begins as a greenfield system. With no legacy code or technical constraints, there is complete freedom to build things the right way from the start.

Following the TDD approach, I begin by writing a failing test. The test expresses the desired behavior, but it fails because the feature isn’t implemented yet, or the code doesn't yet meet the requirements.

With the failing test in place, I proceed to write just enough code to make it pass. The focus is on functionality — no polishing or extra logic at this point.

Once the test passes, I take the opportunity to refactor the code. This step ensures the solution is clean, maintainable, and adheres to design principles — all while relying on the tests to keep behavior intact.

With the code clean and the test passing, I start the next test. This cycle — write a failing test, make it pass, then refactor — becomes the engine of development.
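As a minimal sketch of one turn of this cycle, assume a hypothetical first feature: a rule that orders of 50 or more ship free. Neither the rule nor the names `shipping_cost` and `ShippingCostTest` come from the project in this story; they only illustrate the order of the steps.

```python
import unittest


# Step 2 (green): the simplest code that satisfies the tests below.
# Before this function existed, running the tests raised a NameError (red).
def shipping_cost(order_total: float) -> float:
    """Hypothetical rule: orders of 50 or more ship free, others pay 5."""
    if order_total >= 50:
        return 0.0
    return 5.0


# Step 1 (red): these tests were written first and describe the behavior.
class ShippingCostTest(unittest.TestCase):
    def test_small_order_pays_flat_fee(self):
        self.assertEqual(shipping_cost(20), 5.0)

    def test_order_at_threshold_ships_free(self):
        self.assertEqual(shipping_cost(50), 0.0)
```

Saved as a test module, this can be run with `python -m unittest`. Step 3 (refactor) would then tidy the implementation, for example by naming the threshold and the fee as constants, while both tests stay green.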

I can run the entire test suite in just a few seconds. The tests are fast, isolated, and give me confidence that the system works as expected. When everything passes, I push my changes.

The build pipeline automatically runs all the tests and verifies that the system’s functionality is intact. If the tests pass, the changes are marked as ready for deployment.

I now have high confidence in deploying the new feature, knowing that it has been developed through small, validated steps and thoroughly tested.

Everything works correctly in production. However, not everyone is happy — the process feels slower during the first week, especially compared to rushing features out without automated tests. But this is an intentional trade-off. The team is investing time now to build safety, speed, and confidence for the weeks to come.

The diagram below illustrates this development process using TDD.

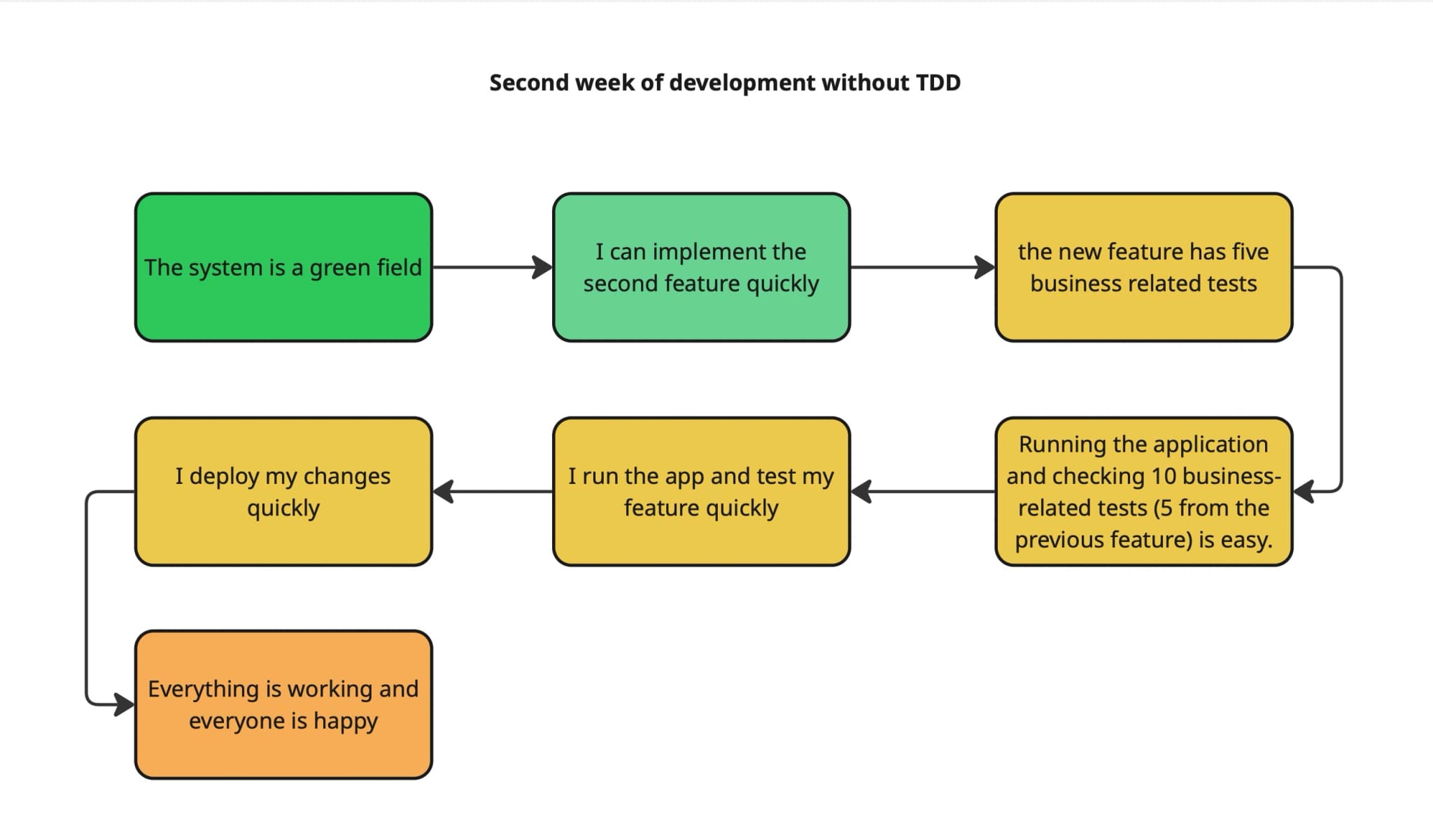

Second Week of Development Without TDD

The system is still relatively new, and development continues without automated tests in place. It’s the beginning of the second week, and the codebase remains manageable.

As a developer, I move on to the next task and implement the second feature quickly. With no upfront testing requirements, I focus entirely on building the new functionality.

Once the second feature is complete, I identify five new business-related tests to verify. These represent expected behaviors for the feature and are used as a checklist during testing.

In order to ensure the application is still functioning properly, I now need to test not only the five new cases but also the five from the previous feature. This brings the total number of checks to ten.

I launch the application and manually verify all ten business scenarios. It's still doable — the system is small enough, and I remember how the features should behave.

After confirming that everything seems to be working, I proceed to deploy my changes. The process feels fast and lightweight, as it did the previous week.

Once deployed, the application continues to function correctly. The team and stakeholders are happy with the progress and results.

The following diagram captures this experience during the second week of development without TDD.

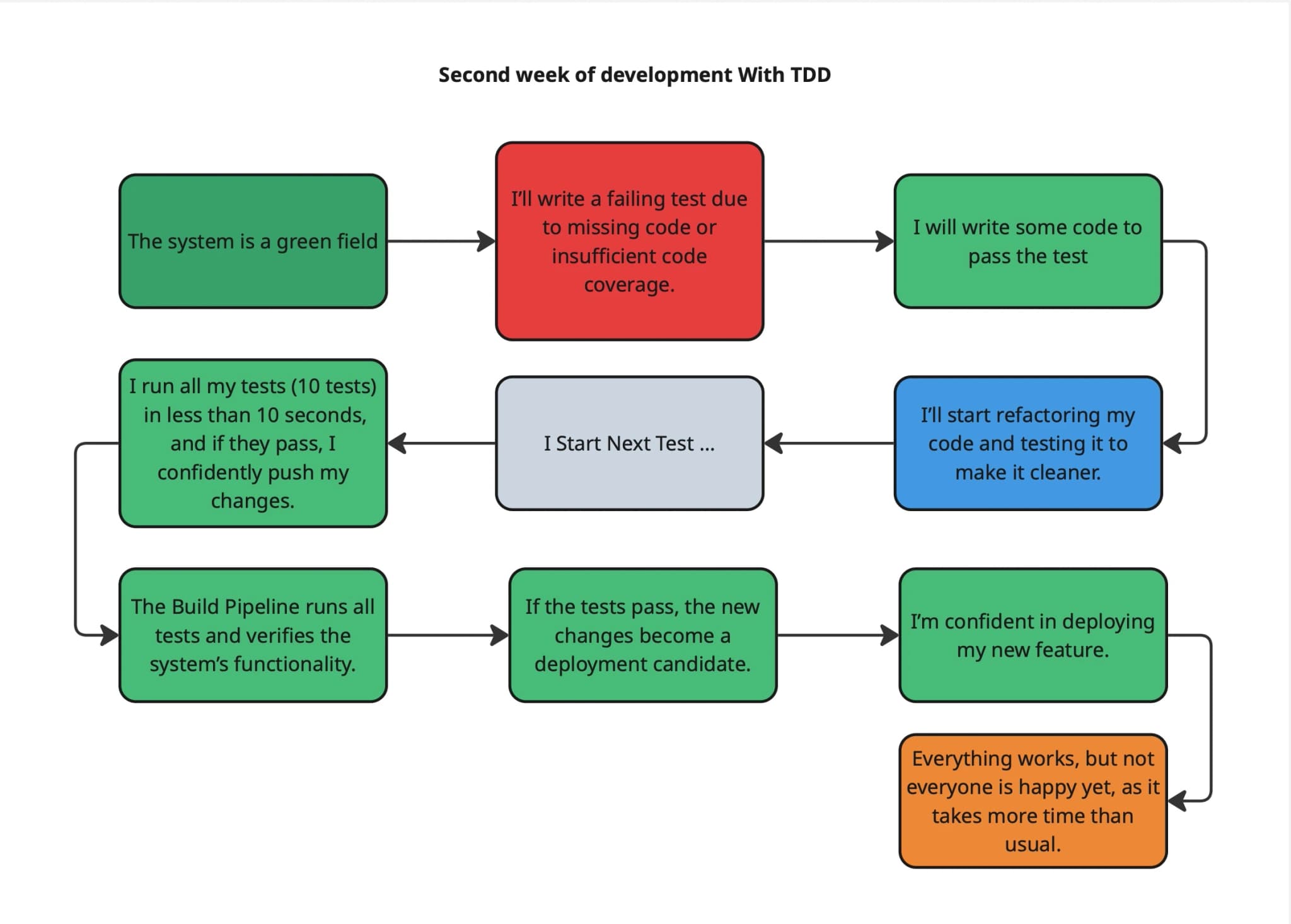

Second Week of Development With TDD

As the second week begins, the system is still a greenfield project. Although the codebase is growing, it's clean, tested, and well-structured thanks to the discipline of Test-Driven Development followed from the beginning.

Development continues using the same cycle: I begin each task by writing a failing test. This test expresses the expected behavior of the next small increment of functionality. It fails, as intended, because the corresponding implementation doesn’t exist yet.

Next, I write just enough code to make the failing test pass. This keeps development focused and lean, avoiding unnecessary code or complexity.

Once the test passes, I refactor the code. I improve readability and structure or remove duplication, all while relying on the test suite to ensure I haven’t broken anything. The test remains green throughout.

This red-green-refactor cycle continues feature by feature. After completing one, I start the next test and repeat the loop.

By now, the test suite includes 10 tests. Yet I can still run all of them in under 10 seconds. This gives me immediate feedback, and if everything passes, I confidently push my changes.

Once pushed, the build pipeline runs automatically. It executes all tests and confirms that the system remains stable and functional.

If the tests pass in CI, the new changes are considered a deployment candidate. I know that what I've built meets the requirements and hasn't introduced regressions.

I feel confident deploying my changes. The safety net of tests — written before the code — makes this possible.

Everything works correctly in production. But there’s a noticeable difference: some stakeholders begin to question the time taken. The process feels slower than shipping features without automated tests. What they may not see yet is that this steady, structured investment is laying the foundation for long-term speed, reliability, and maintainability.

The following diagram visualizes this development process using TDD during the second week of the project.

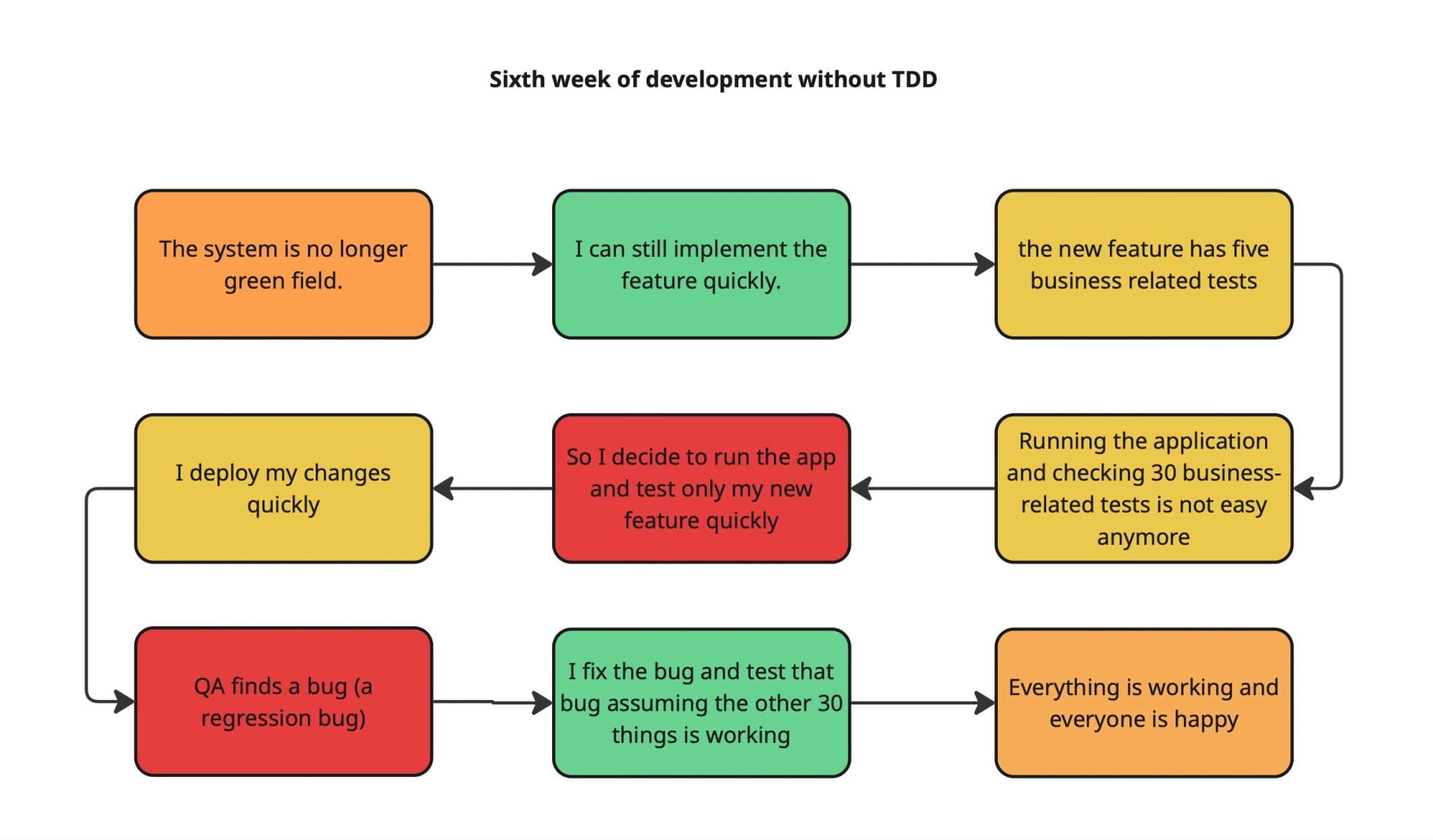

Sixth Week of Development Without TDD

By the sixth week of the project, the system is no longer a greenfield. The codebase has grown, and so has its complexity. While development continues, it no longer feels as light and effortless as it did in the early days.

I begin working on a new feature and, like before, I’m able to implement it quickly. My coding speed hasn’t changed much — writing the logic for the new functionality still feels familiar.

As usual, I identify five business-related tests for this new feature. But now I also need to account for the scenarios from all the earlier features, which brings the total to thirty.

Running the application and manually checking thirty scenarios is no longer easy or fast. It’s time-consuming and increasingly error-prone. With pressure to deliver quickly, I make a decision: I will only test the new feature and skip retesting the others for now.

I run the app, check that my new feature appears to work, and deploy the changes.

Shortly after, QA discovers a bug. It’s a regression — a previously working behavior has been broken. Since I didn’t reverify earlier features, this issue slipped through unnoticed.
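For contrast, here is a sketch of how an automated check kept from an earlier week could have flagged such a regression before deployment. The username example and all names in it are hypothetical; the story does not say which behavior actually broke.

```python
import unittest


def format_username(raw: str) -> str:
    """Behavior from an earlier week (hypothetical): trim and lowercase."""
    return raw.strip().lower()


def format_username_v2(raw: str) -> str:
    """A later edit made for a new feature: keep the original casing.

    It silently drops the lowercasing that earlier features rely on.
    """
    return raw.strip()


class UsernameRegressionTest(unittest.TestCase):
    # Swap in format_username_v2 here and the test fails within seconds,
    # long before QA would notice the broken behavior.
    formatter = staticmethod(format_username)

    def test_username_is_lowercased(self):
        self.assertEqual(self.formatter("  Alice "), "alice")
```

A manual check of only the new feature would never exercise this path, which is how the bug slips through.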

I go back to the code, fix the bug, and test the specific scenario that failed. I assume the rest of the system is still functioning correctly, though I don’t verify all thirty scenarios again.

Eventually, everything appears to be working, and the application is stable once more. Everyone is happy — but the process is starting to show cracks. What was once fast and smooth is now becoming fragile and risky.

The diagram below illustrates how the development process unfolds without TDD as the system grows in complexity by the sixth week.

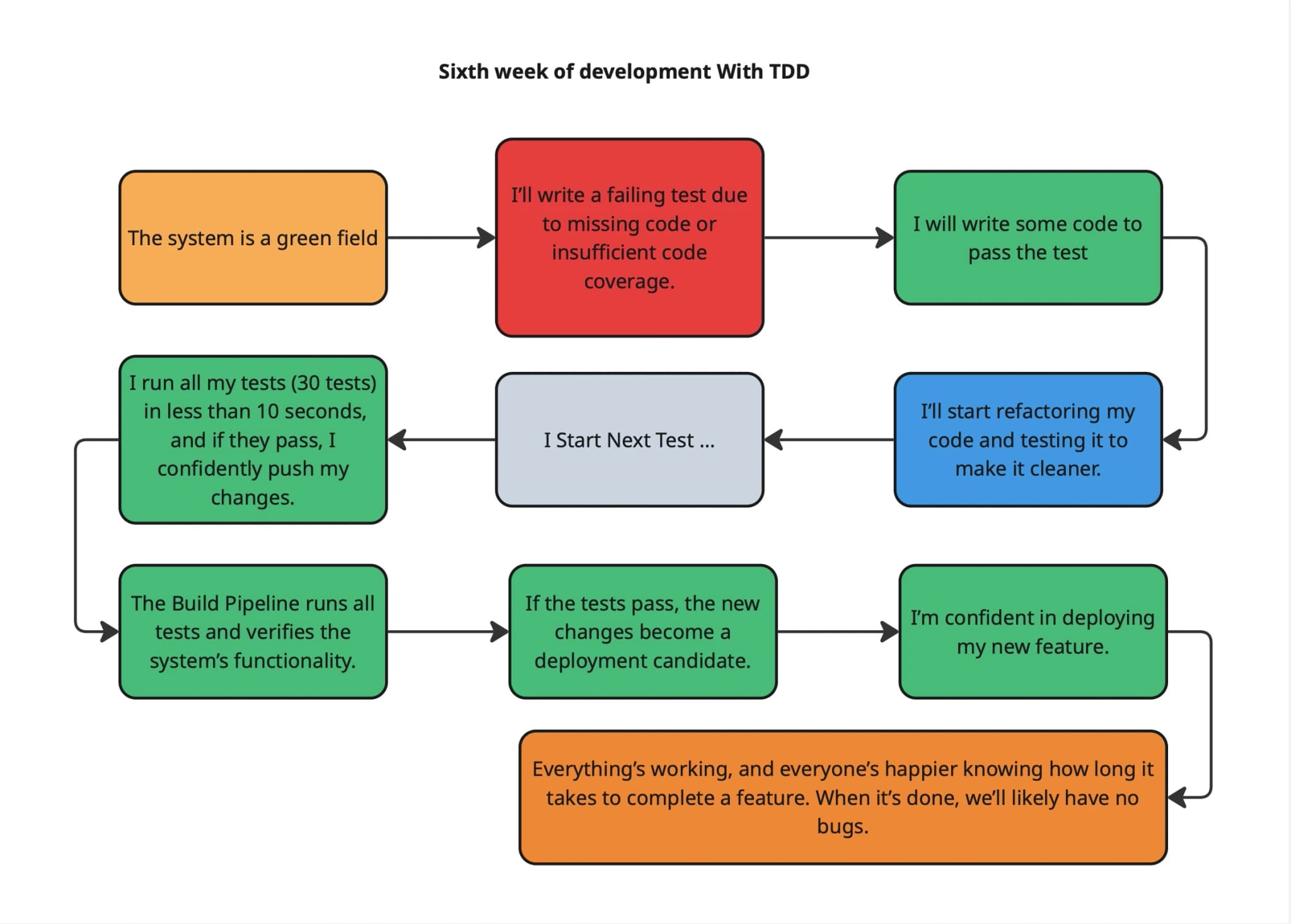

Sixth Week of Development With TDD

By the sixth week, the system has matured, but development continues with the same disciplined flow established from the beginning.

I still follow the same red-green-refactor cycle — write a failing test, implement just enough code to make it pass, then refactor with confidence. Nothing about this has changed.

What’s different now is the scale of the system. There are 30 tests in place — yet I can still run all of them in under 10 seconds. This gives me rapid feedback and the confidence to push changes without hesitation.

The CI pipeline runs all tests automatically. If they pass, the new changes are ready for deployment.

I deploy with certainty, knowing I haven’t broken anything. The tests cover all the critical paths.

This level of confidence isn’t just for me — the entire team is more at ease. Everyone knows how long features will take and trusts that when a task is marked “done,” it won’t come back as a bug later. It may take a bit longer than rushing, but it’s stable, predictable, and nearly bug-free.

The diagram below captures this steady, reliable process in the sixth week of development using TDD.

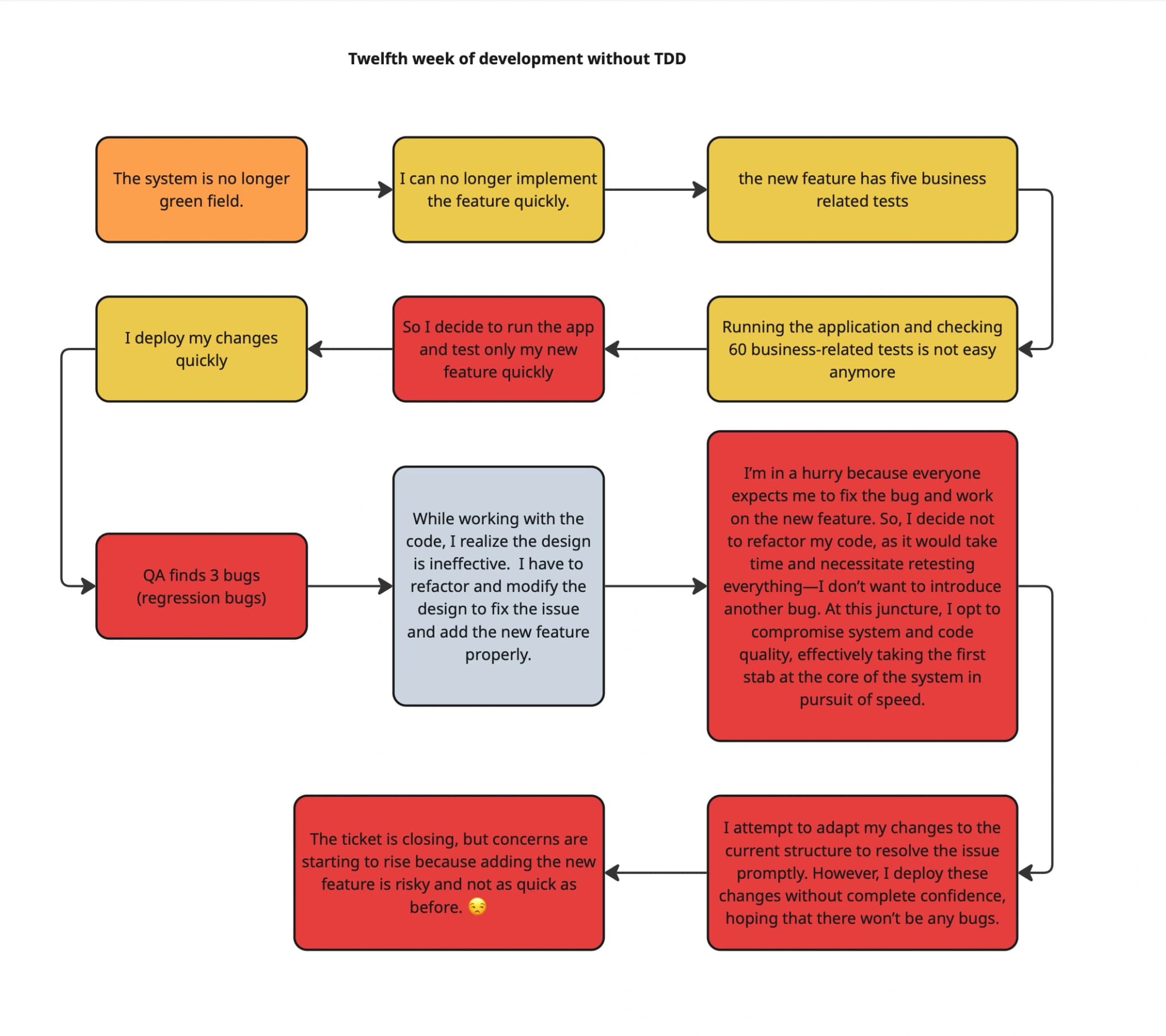

Twelfth Week of Development Without TDD

By the twelfth week, the system is clearly no longer a greenfield. The once-fast development pace has slowed. Implementing new features isn’t as straightforward anymore — the design has aged, and complexity has grown.

Despite this, I proceed to add a new feature. It comes with five business-related scenarios that I need to validate. But now there are 60 business rules in total, including those from previous features. Verifying them all manually has become difficult and time-consuming.

Under pressure to move fast, I test only the new feature and skip retesting the rest. I deploy my changes quickly, as I’ve done before.

QA discovers three regression bugs. I start fixing them, but in the process I realize the underlying design no longer fits. It needs to be refactored before the feature can be implemented correctly and cleanly.

I begin refactoring, but deadlines loom. Everyone expects the bugs to be fixed and the new feature delivered quickly. Refactoring properly would mean retesting everything — which I know I can’t do manually without significant time.

So, I compromise. I avoid refactoring, patch the issue into the existing structure, and make the minimal changes needed to resolve the current problem. I deploy these updates without full confidence, hoping no further bugs will arise.

The ticket is closed. But it’s clear that things aren’t like before. Adding new features has become risky and uncertain. Confidence is fading, and concerns are rising across the team.

The diagram below illustrates how the development experience has changed in the twelfth week without TDD.

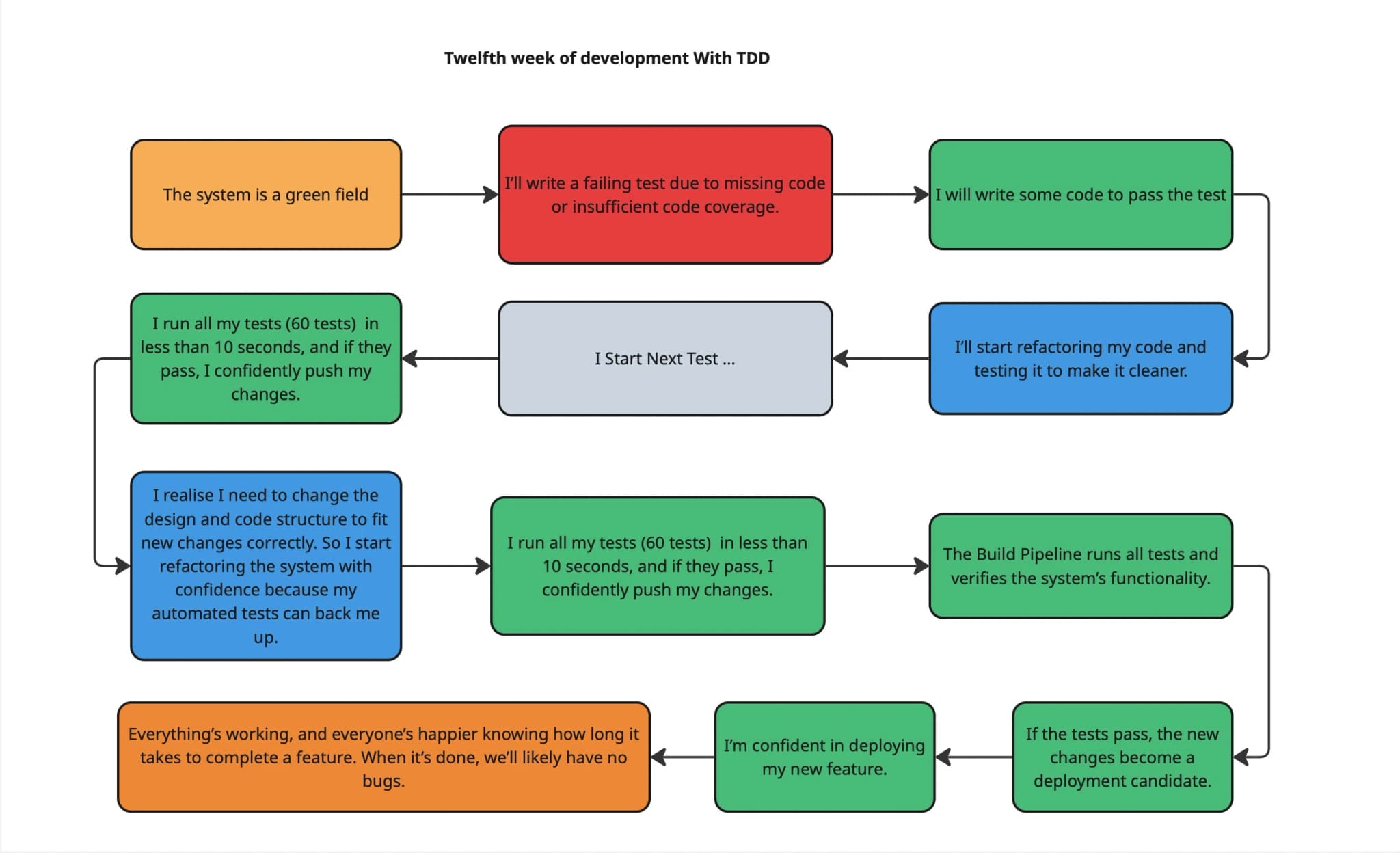

Twelfth Week of Development With TDD

By the twelfth week, the system has grown considerably, and new features sometimes demand significant structural changes. During implementation, I realize the existing design needs to evolve to support the new requirements properly.

Instead of avoiding change, I confidently begin refactoring the system. My test suite, now 60 tests strong, gives me the safety net I need to restructure the code without fear of breaking existing functionality.
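A small sketch of what this safety net means in practice, assuming a hypothetical discount rule: the implementation is restructured from an if-chain into a lookup table, while the tests written earlier stay exactly as they were. The tier names and rates are invented for illustration.

```python
import unittest


# Before the refactoring: a growing if-chain (hypothetical pricing tiers).
def discount_rate_old(customer_tier: str) -> float:
    if customer_tier == "gold":
        return 0.20
    if customer_tier == "silver":
        return 0.10
    return 0.0


# After the refactoring: same behavior, driven by a lookup table.
_RATES = {"gold": 0.20, "silver": 0.10}


def discount_rate(customer_tier: str) -> float:
    return _RATES.get(customer_tier, 0.0)


class DiscountRateTest(unittest.TestCase):
    """Written before the refactoring and left untouched by it."""

    def test_known_tiers(self):
        self.assertEqual(discount_rate("gold"), 0.20)
        self.assertEqual(discount_rate("silver"), 0.10)

    def test_unknown_tier_gets_no_discount(self):
        self.assertEqual(discount_rate("bronze"), 0.0)
```

Because the tests assert on behavior rather than structure, they pass against both versions, which is what makes the restructuring low-risk.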

After making the necessary design adjustments and completing the feature, I run the full test suite — still taking under 10 seconds — and push my changes when everything passes.

The CI pipeline picks up the changes, verifies the system’s functionality through automated tests, and promotes the feature as a deployment candidate. I deploy with certainty, knowing everything has been validated.

Stakeholders are not only satisfied with the result but have come to value the predictability of the process. They understand how long a feature will take, and more importantly, they trust that once it’s done, it’s truly done — with little risk of post-deployment issues.

The following diagram illustrates this reliable and scalable development process by the twelfth week with TDD.

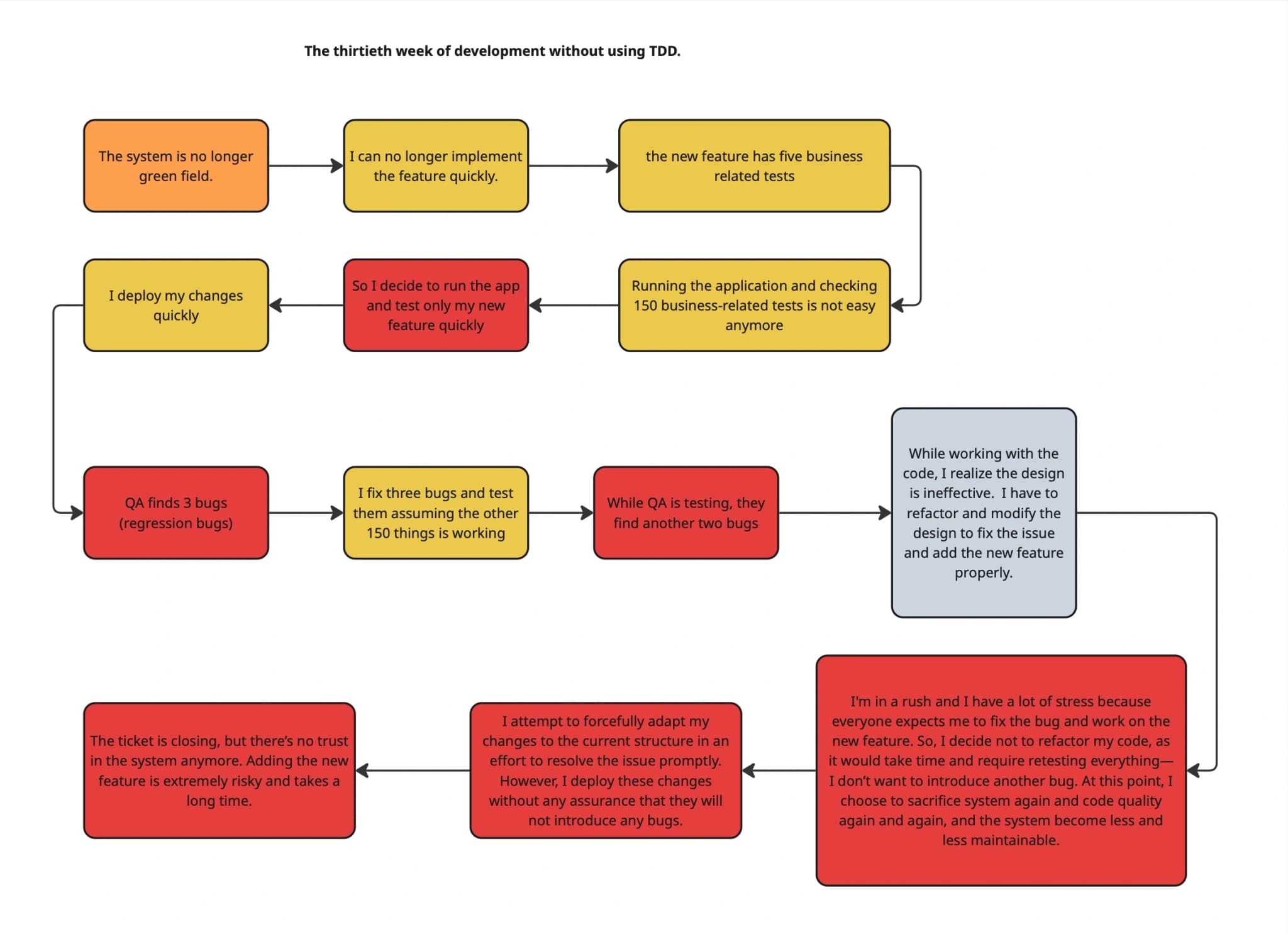

Thirtieth Week of Development Without TDD

At this point, the system has grown large, and the earlier pace of development is long gone. New features can no longer be implemented quickly — even small additions feel heavy.

I add a new feature, which includes five new business rules. But now, the total number of business-related scenarios has reached 150. Verifying them all manually is no longer feasible, so I choose to test only the new feature.

I deploy the changes quickly, as usual, but QA finds three regression bugs. I fix them, testing only the parts I’ve touched, assuming the rest of the system is still stable.

While QA continues testing, two more bugs are found. Meanwhile, I realize the design itself has become difficult to work with. Adding this feature properly would require reworking parts of the system.

I begin refactoring with that intention, but as pressure builds, I abandon the plan. Time is tight, expectations are high, and I don’t want to risk breaking more things. So instead, I force my code into the existing structure.

I push the changes, fully aware they haven’t been properly validated. I hope for the best and brace for more issues.

Eventually, the ticket is closed. But the cost is clear: there is little trust left in the system. Every change now feels risky. Progress is slow, stress is high, and the system has become fragile and difficult to maintain.

The diagram below reflects the painful cost of skipping TDD as a project matures into its thirtieth week.

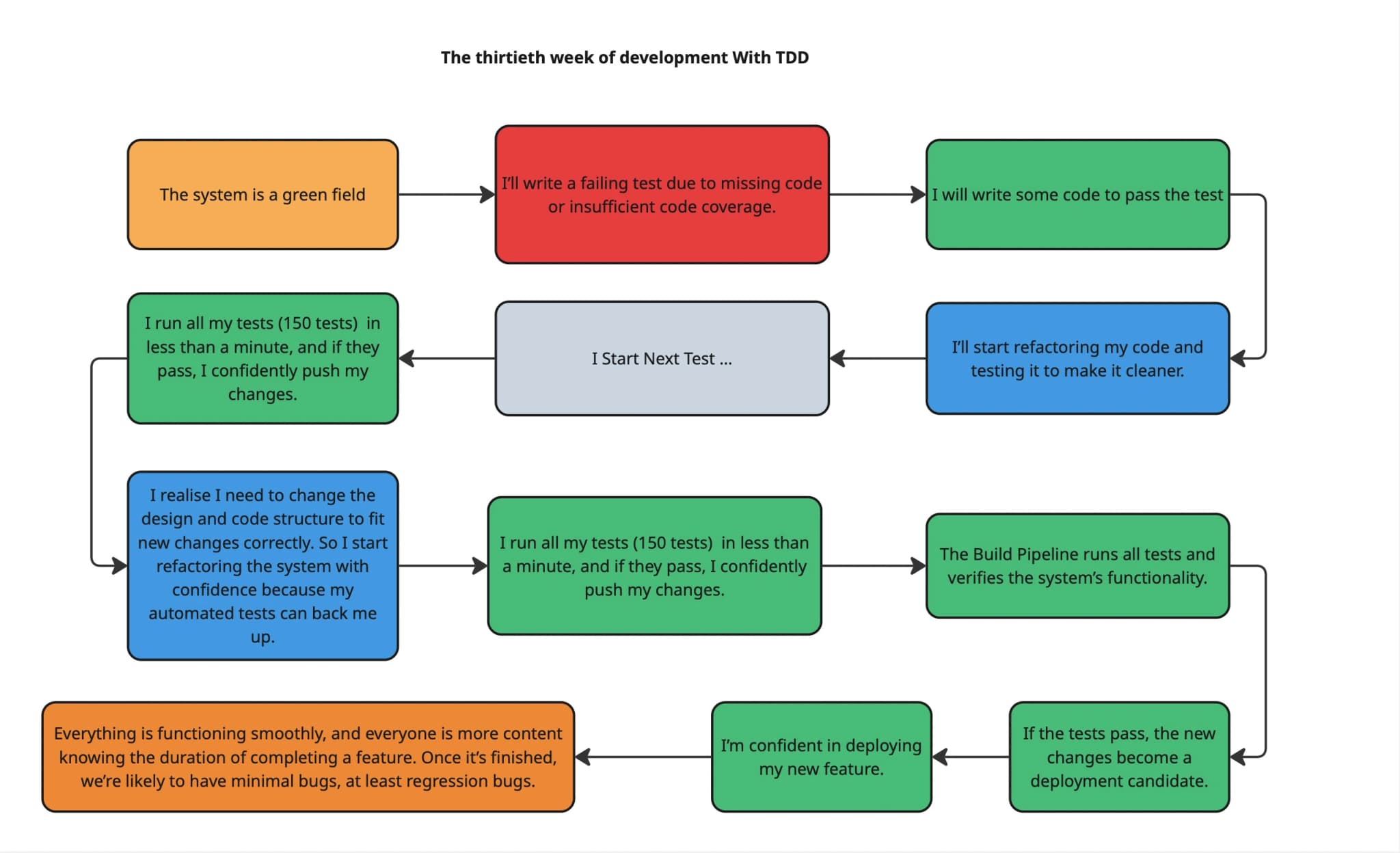

Thirtieth Week of Development With TDD

By week thirty, the system has matured significantly. The test suite now contains 150 tests — and yet, I can still run them all in under a minute. This efficiency allows me to make changes and validate them rapidly.

When a new feature requires adjustments to existing design or structure, I don’t hesitate to refactor. The safety net provided by automated tests gives me the confidence to improve the system continuously, even as it grows in size and complexity.

I complete the feature, validate it through the tests, and push with assurance. The pipeline confirms stability, and the change is promoted for deployment.

Teamwide, there's a shared sense of clarity and calm. Everyone knows how long it typically takes to deliver a feature. And when it’s delivered, we rarely see regression bugs — allowing us to focus on progress, not repair.

The diagram below reflects the stable and maintainable development process enabled by TDD at scale.

Summary & Comparison: A Reflection on 30 Weeks With and Without TDD

Across the span of 30 weeks, we explored how a software system evolves with and without the support of Test-Driven Development (TDD). To keep things consistent, we assumed that each new feature introduces an average of five business-related behaviors, though in real-world systems, this number can be significantly higher — and vary widely depending on complexity.
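The arithmetic behind the story can be sketched in a few lines. It assumes one new feature per week, which is what the weekly totals of 10, 30, 60, and 150 scenarios imply; the function names are illustrative.

```python
SCENARIOS_PER_FEATURE = 5  # the article's assumption, with one feature per week


def scenarios_to_verify(week: int) -> int:
    """Scenarios a full regression pass must cover in the given week."""
    return SCENARIOS_PER_FEATURE * week


def cumulative_manual_checks(week: int) -> int:
    """Total manual checks performed so far if every week retests everything."""
    return sum(scenarios_to_verify(w) for w in range(1, week + 1))


for week in (1, 2, 6, 12, 30):
    print(week, scenarios_to_verify(week), cumulative_manual_checks(week))
```

Under these assumptions, a full pass in week 30 covers 150 scenarios, and retesting everything by hand every week adds up to 2,325 manual checks over the 30 weeks. The per-week load grows linearly, but the accumulated effort grows quadratically, which is why the manual approach is abandoned long before week 30.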

In a team setting with multiple developers, the total number of business rules and interactions increases quickly. As developers contribute simultaneously, the risk of unintentional regressions and design degradation grows. Without fast, reliable feedback loops in place, verifying behavior becomes increasingly manual and error-prone.

This is where automated tests — especially when written before the code as TDD encourages — start to act not just as guards against regressions, but also as living documentation of the system's behavior. For a new developer joining a TDD-based system, the test suite can serve as a map of how the system works, what’s expected, and how changes should behave. In contrast, entering a legacy system with no reliable tests often means relying on tribal knowledge, reading through cluttered code, and cautiously making changes — sometimes with fear of breaking unknown parts.

That said, TDD isn’t a silver bullet. It’s one part of a broader Agile ecosystem. Writing good tests is a craft: fragile, overly coupled tests can be more painful than helpful. Having tests doesn’t guarantee a bug-free system, and poor test design can give a false sense of security.
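To make the difference concrete, here is a sketch contrasting a behavior-focused test with one coupled to implementation details. The `Cart` class is hypothetical, and the second test is included as an example of what to avoid.

```python
import unittest
from unittest import mock


class Cart:
    """Hypothetical class used only to contrast the two test styles."""

    def __init__(self):
        self._items = []

    def add(self, price: float) -> None:
        self._items.append(price)

    def total(self) -> float:
        return self._sum_items()

    def _sum_items(self) -> float:
        return sum(self._items)


class CartTest(unittest.TestCase):
    def test_total_reflects_added_items(self):
        # Robust: asserts only on observable behavior.
        cart = Cart()
        cart.add(2.0)
        cart.add(3.0)
        self.assertEqual(cart.total(), 5.0)

    def test_total_calls_private_helper(self):
        # Fragile: passes today, but breaks as soon as _sum_items is renamed
        # or inlined, even though the cart's behavior has not changed.
        cart = Cart()
        with mock.patch.object(Cart, "_sum_items", return_value=0.0) as helper:
            cart.total()
        helper.assert_called_once_with()
```

Both tests pass, yet only the first one would survive a refactoring of `Cart`. A suite dominated by the second kind punishes exactly the design changes the tests are supposed to enable.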

Additionally, not all tests are equal. In this comparison, we focused mainly on unit tests, which validate small pieces of logic quickly and in isolation. But achieving real confidence often also involves integration tests, contract tests, UI tests, and performance checks — each playing a role in the full spectrum of quality assurance.

Finally, it's important not to frame this as TDD vs. no TDD in a binary, competitive way. TDD offers structure, safety, and clarity — especially as complexity grows — but its value multiplies when combined with clear design, clean code, pair programming, continuous integration, and healthy team practices. It's not about dogma; it's about choosing disciplined practices that scale with your codebase and your team.

In essence, TDD isn’t the destination — it’s a vehicle. When used well, it helps teams move faster, with more confidence, and build software that’s easier to change — not just now, but months and years down the road.